This article surveys the services Amazon Web Services (AWS) provides for handling and analyzing large datasets: machine learning with Amazon SageMaker, data preparation with AWS Glue, and data warehousing with Amazon Redshift.

Overview of AWS ML services for big data

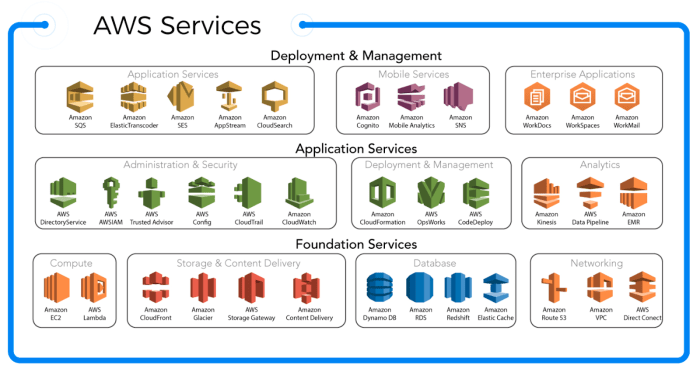

AWS offers a range of machine learning services that can be applied to large datasets. They plug into existing big data workflows through other AWS services, giving organizations tools to extract insights from their data. The sections below cover the main services, how they fit into a pipeline, and where they are used.

Different Machine Learning Services Offered by AWS

- Amazon SageMaker: A fully managed service that enables developers and data scientists to build, train, and deploy machine learning models quickly.

- Amazon Rekognition: Provides deep learning-based image and video analysis for applications in various industries.

- Amazon Comprehend: A natural language processing service that can extract insights and relationships from unstructured text.
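As a rough illustration of how one of these services is called at scale, the sketch below sends a list of texts to Amazon Comprehend in batches. It assumes a boto3 Comprehend client is passed in and relies on the documented limit of 25 documents per `batch_detect_sentiment` request; the helper names `chunk` and `batch_sentiment` are made up for this example.

```python
from typing import Iterator, List, Sequence

# Per-request document limit for Comprehend's BatchDetectSentiment operation.
MAX_BATCH_SIZE = 25


def chunk(items: Sequence[str], size: int = MAX_BATCH_SIZE) -> Iterator[List[str]]:
    """Yield consecutive slices of `items`, each holding at most `size` elements."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


def batch_sentiment(texts: Sequence[str], client, language_code: str = "en") -> List[str]:
    """Return one sentiment label per input text, in input order.

    `client` is expected to be a boto3 Comprehend client, for example
    boto3.client("comprehend"). It is passed in so the function can be
    exercised without AWS credentials.
    """
    labels: List[str] = []
    for batch in chunk(texts):
        response = client.batch_detect_sentiment(
            TextList=batch, LanguageCode=language_code
        )
        # Each ResultList entry carries an Index into the submitted batch.
        # Documents that failed appear in ErrorList instead; they are
        # marked "ERROR" here (a choice made for this sketch).
        by_index = {r["Index"]: r["Sentiment"] for r in response["ResultList"]}
        labels.extend(by_index.get(i, "ERROR") for i in range(len(batch)))
    return labels
```

With real credentials, `batch_sentiment(reviews, boto3.client("comprehend"))` would return labels such as `POSITIVE` or `NEGATIVE`, one per review.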

Integration into Big Data Workflows

- AWS ML services can be seamlessly integrated into big data workflows using services like AWS Glue for data preparation and AWS Lambda for serverless computing.

- Because these are managed services, AWS provisions the underlying capacity, which makes it easier to process and analyze large volumes of data. Account quotas and per-request limits still apply.
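One common way to wire Lambda and Glue together is to start a Glue job whenever a new object lands in Amazon S3. The following is a minimal sketch of such a Lambda handler, not a production implementation. The job name `clean-raw-events` and the `--source_path` argument are hypothetical, and the optional `glue` parameter exists only so the handler can be tried locally with a stand-in client.

```python
import urllib.parse
from typing import Dict, List, Tuple

GLUE_JOB_NAME = "clean-raw-events"  # hypothetical Glue job name


def s3_objects(event: Dict) -> List[Tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification payload."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become "+").
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def handler(event, context, glue=None):
    """Lambda entry point: start one Glue job run per newly uploaded object."""
    if glue is None:
        import boto3  # imported lazily so the parsing logic runs without the SDK

        glue = boto3.client("glue")
    run_ids = []
    for bucket, key in s3_objects(event):
        response = glue.start_job_run(
            JobName=GLUE_JOB_NAME,
            # Glue hands these to the job script as named arguments.
            Arguments={"--source_path": f"s3://{bucket}/{key}"},
        )
        run_ids.append(response["JobRunId"])
    return {"started": run_ids}
```

Starting one job run per object keeps the sketch simple; for high upload rates, batching several objects into one run would likely be cheaper.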

Use Cases and Industries

- Financial Services: AWS ML services are used for fraud detection, risk assessment, and personalized customer experiences in the banking and insurance sectors.

- Retail: Retailers leverage AWS ML services for demand forecasting, recommendation engines, and inventory optimization.

- Healthcare: AWS ML services are used to support patient diagnosis, predictive analytics, and personalized medicine in the healthcare industry.

Amazon SageMaker for big data analytics

Amazon SageMaker is the general-purpose machine learning platform within the AWS ecosystem. It covers the workflow from data preprocessing through model training to deployment, so a model built on a large dataset can stay within one service from experiment to production.

Features and Capabilities

- Built-in algorithms: SageMaker offers a diverse set of built-in algorithms that are optimized for big data processing, allowing users to train models efficiently on large datasets.

- Scalability: With SageMaker, users can easily scale their machine learning workflows to handle massive amounts of data without worrying about infrastructure management.

- Data labeling: SageMaker provides data labeling tools (SageMaker Ground Truth), which matter because supervised models need labeled examples and labeling is often the bottleneck on big data sets.

- Automatic model tuning: The platform offers automatic model tuning capabilities that optimize hyperparameters to improve model performance on large datasets.
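To make automatic model tuning more concrete, here is a sketch of the tuning configuration that would form part of a `create_hyper_parameter_tuning_job` request. The metric name and the `eta` and `max_depth` ranges assume the built-in XGBoost algorithm and are illustrative values, not recommendations. A complete request also needs a job name and a training job definition, which are omitted here.

```python
from typing import Dict


def tuning_config(max_jobs: int = 20, max_parallel: int = 4) -> Dict:
    """Build the HyperParameterTuningJobConfig part of a tuning request."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")
    return {
        # Bayesian search uses earlier results to choose the next trials.
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {
            "Type": "Maximize",
            "MetricName": "validation:auc",  # assumes built-in XGBoost
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {
            # The API expects range bounds as strings.
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10"},
            ],
        },
    }
```

Keeping `max_parallel` well below `max_jobs` gives a Bayesian strategy more completed trials to learn from before it picks the next ones, at the cost of a longer wall-clock run.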

Comparison with Other AWS ML Services

- Scalability: With SageMaker, users choose the instance types and counts for training and hosting, so workflows can be sized to the dataset. Services such as Rekognition and Comprehend are API-based, and AWS handles their scaling.

- Performance: Because users control the training infrastructure, SageMaker jobs can be tuned for large datasets, for example by distributing training across several instances. The pre-trained services trade that control for simplicity.

- Integration: SageMaker integrates with other AWS big data tools such as Amazon S3 and Glue, so storage, preparation, and modeling can sit in one pipeline.

Best Practices

- Use Amazon SageMaker in conjunction with Amazon S3 for storing large datasets and Glue for data preparation to streamline the machine learning workflow.

- Take advantage of SageMaker’s built-in algorithms and automatic model tuning features to optimize model performance on big data sets.

- Monitor resource utilization and optimize the infrastructure configuration to ensure efficient processing of large volumes of data.
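For the S3 side of these practices, the sketch below builds one input channel of the kind passed in the `InputDataConfig` list of a SageMaker training job request. It assumes CSV data under a single S3 prefix. The function name and defaults are illustrative, and `FastFile` input mode is only one of the available options.

```python
from typing import Dict


def training_channel(
    s3_uri: str,
    name: str = "train",
    sharded: bool = True,
    input_mode: str = "FastFile",
) -> Dict:
    """Describe one S3-backed input channel for a SageMaker training job."""
    if not s3_uri.startswith("s3://"):
        raise ValueError("s3_uri must start with s3://")
    if input_mode not in {"File", "Pipe", "FastFile"}:
        raise ValueError("unsupported input mode")
    return {
        "ChannelName": name,
        "DataSource": {
            "S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": s3_uri,
                # ShardedByS3Key gives each training instance a subset of the
                # objects; FullyReplicated copies everything to every instance.
                "S3DataDistributionType": (
                    "ShardedByS3Key" if sharded else "FullyReplicated"
                ),
            }
        },
        "ContentType": "text/csv",
        "InputMode": input_mode,
    }
```

Sharding only helps when the training algorithm is written to work on partial data per instance, so it is worth checking the algorithm's documentation before enabling it.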

AWS Glue for data preparation

AWS Glue is a serverless data integration service that prepares big data for machine learning models by cleaning and transforming large datasets. It automates much of the extract, transform, and load (ETL) work, allowing data engineers to focus on the transformation logic rather than on the infrastructure that runs it.

Step-by-step guide on using AWS Glue

- Create a new AWS Glue job by defining the data source and target.

- Configure the job properties, including the data transformation logic and output format.

- Run the job to execute the data cleaning and transformation processes.

- Monitor the job execution and review the output data for accuracy.
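The run and monitor steps above can also be scripted. The following sketch starts an existing Glue job and polls until it finishes, assuming a boto3 Glue client is passed in. The set of states treated as terminal and the 30-second polling interval are choices made for this example.

```python
import time
from typing import Callable

# Job run states treated as final in this sketch.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR"}


def run_glue_job(
    glue,
    job_name: str,
    poll_seconds: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Start a Glue job run and block until it reaches a terminal state.

    `glue` is expected to be a boto3 Glue client, for example
    boto3.client("glue"). Returns the final JobRunState string.
    """
    run_id = glue.start_job_run(JobName=job_name)["JobRunId"]
    while True:
        run = glue.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        state = run["JobRunState"]
        if state in TERMINAL_STATES:
            return state
        # Still starting or running: wait before asking again.
        sleep(poll_seconds)
```

A caller would typically check that the returned state is `SUCCEEDED` before reviewing the output data, and inspect the job run's logs otherwise.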

Benefits of using AWS Glue

- Automation: AWS Glue automates the data preparation process, reducing manual effort and potential errors.

- Scalability: It can handle large volumes of data efficiently, making it suitable for big data projects.

- Cost-effective: By streamlining data preparation tasks, AWS Glue can help reduce operational costs associated with manual data cleaning and transformation.

- Integration: AWS Glue seamlessly integrates with other AWS services, enabling a comprehensive data processing pipeline within the AWS ecosystem.

Amazon Redshift for data warehousing

Amazon Redshift is a fully managed data warehousing service provided by AWS, designed for large-scale data analytics. It lets users store large volumes of structured data and query them with SQL at interactive speeds, making it a powerful tool for organizations dealing with big data.

Integration with AWS ML services

Amazon Redshift integrates with AWS ML services, so organizations can run advanced analytics and machine learning against the data in their warehouse. Redshift handles the complex SQL queries, and the results can feed model training or be enriched with model predictions.

- Amazon Redshift Spectrum: This feature allows users to run SQL queries directly on data stored in Amazon S3 without loading it into the cluster first, extending Redshift's reach from the warehouse to the data lake.

- Integration with Amazon SageMaker: Through Redshift ML, users can create and train models with SQL statements while SageMaker does the training behind the scenes, then call the resulting model as a SQL function inside the data warehouse.

- Near-real-time data analytics: With features such as streaming ingestion, Amazon Redshift can query data shortly after it arrives, enabling organizations to make faster decisions and respond promptly to changing business needs.
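As a sketch of the SageMaker integration, Redshift ML exposes model training through a `CREATE MODEL` SQL statement. The helper below assembles such a statement and submits it through the Redshift Data API. The function names, the table, and the column names in the usage note are all hypothetical, and the inputs are interpolated directly into the SQL, so they must come from trusted code rather than from user input.

```python
from typing import Optional


def create_model_sql(
    model: str,
    query: str,
    target: str,
    function: str,
    iam_role_arn: str,
    s3_bucket: str,
) -> str:
    """Assemble a Redshift ML CREATE MODEL statement from trusted inputs."""
    return (
        f"CREATE MODEL {model}\n"
        f"FROM ({query})\n"          # training data, including the target column
        f"TARGET {target}\n"         # column the model learns to predict
        f"FUNCTION {function}\n"     # SQL function name used for predictions
        f"IAM_ROLE '{iam_role_arn}'\n"
        f"SETTINGS (S3_BUCKET '{s3_bucket}');"  # staging area for training data
    )


def submit_statement(
    sql: str, workgroup: str, database: str, client: Optional[object] = None
) -> str:
    """Send a statement via the Redshift Data API and return its statement id."""
    if client is None:
        import boto3  # imported lazily so the SQL builder works without the SDK

        client = boto3.client("redshift-data")
    response = client.execute_statement(
        WorkgroupName=workgroup, Database=database, Sql=sql
    )
    return response["Id"]
```

For example, `create_model_sql("churn_model", "SELECT age, plan, churned FROM customers", "churned", "predict_churn", role_arn, "my-ml-bucket")` yields a statement that, once training completes, would let analysts write `SELECT predict_churn(age, plan) FROM customers`. `WorkgroupName` targets Redshift Serverless; a provisioned cluster would use `ClusterIdentifier` instead.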

Real-world examples

Amazon Redshift has been widely adopted by organizations across various industries for big data warehousing and analytics. For instance, Airbnb uses Amazon Redshift to analyze large volumes of data generated by its platform, helping it optimize pricing strategies and improve user experience.

Amazon Redshift provides the scalability and performance needed to handle the growing volume of data generated by modern businesses, making it a valuable tool for organizations looking to derive actionable insights from their data.

In conclusion, AWS ML services offer a powerful toolkit for organizations looking to harness big data analytics. With services like Amazon SageMaker, AWS Glue, and Amazon Redshift, businesses can streamline their data processing and derive actionable insights from large datasets.
