This guide walks through building AI models in Amazon SageMaker, covering the platform's main features, benefits, and best practices.

It follows the workflow from setting up SageMaker and preparing data to training, evaluating, and deploying models, so you can see where each SageMaker tool fits into AI development.

Introduction to AI models in SageMaker

Building AI models in SageMaker means developing and deploying artificial intelligence models with Amazon SageMaker, AWS's managed, cloud-based machine learning platform. SageMaker offers tools that cover the whole model development lifecycle, from data preparation and model training to deployment and monitoring.

Overview of SageMaker’s capabilities

- Managed training and deployment: SageMaker runs training jobs and hosts models on managed infrastructure, so both can be started from the console, SageMaker Studio, or a few SDK calls without provisioning servers yourself.

- Built-in algorithms: The platform offers a wide range of built-in algorithms and pre-built models that can be easily customized to suit specific use cases.

- Scalability and flexibility: SageMaker allows users to scale their AI projects based on demand, making it suitable for both small-scale experiments and large-scale production deployments.

Importance of SageMaker in AI development

SageMaker plays a crucial role in accelerating the AI development process by providing a unified platform that simplifies complex tasks such as data preprocessing, model training, hyperparameter tuning, and deployment. This helps data scientists and developers focus more on the actual AI model building and experimentation, rather than getting bogged down by infrastructure and technical details.

Benefits of using SageMaker for AI model building

- Cost-effective: SageMaker offers a pay-as-you-go pricing model, allowing users to only pay for the resources they consume, making it a cost-effective solution for AI development.

- Time-saving: The automation and optimization features in SageMaker help reduce the time required for model development and deployment, enabling faster go-to-market strategies.

- Collaboration and teamwork: SageMaker supports collaboration among team members, making it easy to share datasets, models, and experiments, fostering a more collaborative and productive work environment.

Setting up SageMaker for AI model development

Setting up SageMaker for AI model development is a crucial step in creating efficient and accurate AI models. By following best practices and optimizing settings, developers can streamline the process and maximize the potential of their models.

Configuring SageMaker Environment

- Access the Amazon SageMaker console and create a new notebook instance.

- Choose the appropriate instance type based on your computational needs.

- Create or choose an IAM execution role that SageMaker can assume, with permissions for the data and resources it needs (for example, the S3 buckets holding your datasets).

- Install any additional libraries or dependencies required for your AI model development.
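The permissions step usually comes down to an IAM execution role that the SageMaker service is allowed to assume. A minimal sketch of the two policy documents involved, built as Python dicts and serialized to JSON (the form IAM expects, for example in the `AssumeRolePolicyDocument` argument of boto3's `iam.create_role`). The bucket name, the prefix, and the read-only scope are assumptions for illustration; a real role also needs write access to wherever training output is stored.

```python
import json

# Trust policy: lets the SageMaker service assume the execution role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "sagemaker.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def s3_read_policy(bucket: str, prefix: str) -> dict:
    """Least-privilege permissions policy for reading one S3 prefix.

    bucket and prefix are hypothetical placeholders.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}/*"],
            },
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
        ],
    }


# IAM takes policy documents as JSON strings.
trust_json = json.dumps(TRUST_POLICY)
read_json = json.dumps(s3_read_policy("my-training-bucket", "datasets/churn"))
```

Keeping the trust policy separate from the permissions policy makes it easy to reuse one trust relationship while scoping data access per project.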

Tools and Resources in SageMaker

- Utilize Jupyter notebooks for interactive development and experimentation.

- Take advantage of built-in algorithms and model templates for quick prototyping.

- Use SageMaker Debugger for real-time monitoring and debugging of training processes.

- Explore SageMaker Studio for a comprehensive integrated development environment.

Optimizing SageMaker Settings

- Adjust hyperparameters to fine-tune model performance and accuracy.

- Optimize data input and output configurations for efficient data processing.

- Leverage SageMaker Automatic Model Tuning for automated hyperparameter optimization.

- Monitor resource utilization and adjust instance types for cost-effectiveness.
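To make Automatic Model Tuning more concrete, here is a minimal sketch of a tuning configuration. The dict is shaped like the `HyperParameterTuningJobConfig` argument of boto3's `create_hyperparameter_tuning_job`; a complete request also needs a training job definition, which is not shown. The hyperparameter names (`max_depth`, `eta`, `subsample`), their ranges, and the `validation:auc` objective assume the built-in XGBoost algorithm and are illustrative, not recommendations.

```python
def tuning_job_config(max_jobs: int = 20, max_parallel: int = 2) -> dict:
    """HyperParameterTuningJobConfig sketch for built-in XGBoost (assumed)."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")
    return {
        # Bayesian is SageMaker's default strategy.
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {
            "Type": "Maximize",
            "MetricName": "validation:auc",  # metric emitted by built-in XGBoost
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        # Range bounds are passed as strings in the API.
        "ParameterRanges": {
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10", "ScalingType": "Auto"},
            ],
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3", "ScalingType": "Logarithmic"},
                {"Name": "subsample", "MinValue": "0.5", "MaxValue": "1.0", "ScalingType": "Linear"},
            ],
        },
        # Lets SageMaker stop trials that are unlikely to beat the best one so far.
        "TrainingJobEarlyStoppingType": "Auto",
    }
```

Capping `MaxParallelTrainingJobs` well below `MaxNumberOfTrainingJobs` is a deliberate choice: Bayesian search learns from completed trials, so running fewer trials at once gives it more information per new trial.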

Data preparation for AI models in SageMaker

Preparing data for AI model training in SageMaker is a crucial step that directly impacts the model’s performance and accuracy. Proper data handling ensures that the model learns effectively from the information provided, leading to more reliable predictions and insights.

Data Cleaning and Preprocessing Techniques

- One common technique is handling missing values by either removing rows or columns with missing data or imputing values based on statistical measures.

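- Normalization and standardization put features on a similar scale. This matters for scale-sensitive algorithms such as linear models, k-means, and neural networks, where a feature with a large range can otherwise dominate training; tree-based models such as XGBoost are largely insensitive to feature scale.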

- Feature engineering involves creating new features or transforming existing ones to improve the model’s ability to extract patterns from the data.

- Removing outliers can help improve the model’s generalization capability by reducing the impact of noisy data points.
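The cleaning steps above can be sketched with the Python standard library alone. This assumes a single numeric feature held in a list, with `None` marking missing values; in practice the same steps usually run over whole tables with pandas in a notebook or a SageMaker Processing job, which is not shown here. Median imputation, z-score standardization, and Tukey's IQR fences are one common choice for each step, not the only one.

```python
import statistics
from typing import List, Optional


def impute_median(values: List[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def standardize(values: List[float]) -> List[float]:
    """Z-score standardization: zero mean, unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def drop_iqr_outliers(values: List[float], k: float = 1.5) -> List[float]:
    """Keep values inside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]
```

One design point worth keeping in mind: the median, mean, and standard deviation should be computed on the training split only and then reused for validation and inference data, otherwise information from the evaluation data leaks into training.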

Importance of High-Quality Data

- High-quality data is crucial for training accurate and reliable AI models in SageMaker. Poor-quality data can lead to biased predictions and inaccurate insights.

- Clean and well-prepared data ensures that the model can learn meaningful patterns and relationships, leading to better decision-making capabilities.

- Data quality directly impacts the model’s performance, making it essential to invest time and effort in data preparation and cleaning.

Tips for Handling Large Datasets Effectively

- Consider using Amazon S3 for storing and accessing large datasets in SageMaker, as it provides scalable storage solutions.

- Utilize SageMaker’s distributed computing capabilities to process and train models on large datasets efficiently.

- Shuffle data samples before and during training so that batches are not skewed by the order in which the data was stored (for example, sorted by label or date).

- Batch processing can help in training models on large datasets by dividing the data into smaller batches for efficient processing.
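Shuffling and batching combine naturally into one generator. The sketch below assumes the records fit in memory; for datasets in S3 that do not, the same idea is typically applied to the order of files or shards instead. The function name and the per-epoch seed convention are illustrative choices.

```python
import random
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def shuffled_batches(records: Sequence[T], batch_size: int, seed: int) -> Iterator[List[T]]:
    """Yield records in a seeded random order, batch_size at a time.

    The last batch may be smaller than batch_size. Passing a different
    seed for each epoch gives a different order on every pass.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    order = list(range(len(records)))
    random.Random(seed).shuffle(order)  # shuffle indices, not the data itself
    for start in range(0, len(order), batch_size):
        yield [records[i] for i in order[start:start + batch_size]]
```

Seeding a private `random.Random` instance keeps the shuffle reproducible without touching global random state, which helps when comparing training runs.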

Model training and evaluation in SageMaker

Training AI models in SageMaker involves several steps to ensure optimal performance and accuracy. Once the data is prepared and the environment is set up, the model training process can begin. During this phase, the model learns from the data to make predictions or classifications. Evaluation is crucial to assess the performance of the trained model and make improvements if necessary.

Model Training Steps

- Define the model architecture and choose the appropriate algorithm based on the problem at hand.

- Split the data into training and validation sets to assess the model’s performance.

- Configure the training job in SageMaker by specifying the instance type, hyperparameters, and other settings.

- Start the training job and monitor the progress to ensure the model is learning effectively.
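The training steps above can be sketched as two pieces: a reproducible train/validation split, and the keyword arguments for boto3's `sagemaker` client `create_training_job` call. The image URI, role ARN, bucket layout, instance type, and XGBoost hyperparameters are placeholders or assumptions; for built-in algorithms the image URI is region-specific and can be looked up with the SageMaker Python SDK's `sagemaker.image_uris.retrieve`.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_validation_split(rows: Sequence[T], val_fraction: float = 0.2,
                           seed: int = 42) -> Tuple[List[T], List[T]]:
    """Randomly split rows into (train, validation) with a fixed seed."""
    if not 0 < val_fraction < 1:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def s3_channel(name: str, uri: str, content_type: str = "text/csv") -> dict:
    """One InputDataConfig channel that reads every object under an S3 prefix."""
    return {
        "ChannelName": name,
        "DataSource": {
            "S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": uri,
                "S3DataDistributionType": "FullyReplicated",
            }
        },
        "ContentType": content_type,
    }


def training_job_request(job_name: str, image_uri: str, role_arn: str, bucket: str) -> dict:
    """Keyword arguments for sagemaker.create_training_job (sketch, placeholder values)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [
            s3_channel("train", f"s3://{bucket}/train/"),
            s3_channel("validation", f"s3://{bucket}/validation/"),
        ],
        "OutputDataConfig": {"S3OutputPath": f"s3://{bucket}/output/"},
        "ResourceConfig": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 30},
        # Hyperparameter values must be strings in the API.
        "HyperParameters": {"objective": "binary:logistic", "num_round": "100", "max_depth": "5"},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }
```

With credentials configured, the request would be submitted as `boto3.client("sagemaker").create_training_job(**training_job_request(...))`, and progress can be followed with `describe_training_job` or in CloudWatch Logs.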

Supported Algorithms and Frameworks

- SageMaker supports a wide range of options for training AI models, including built-in algorithms such as XGBoost, Linear Learner, and k-means clustering, as well as frameworks such as TensorFlow.

- Each algorithm or framework has its own strengths and weaknesses, so it’s essential to choose the most suitable one for the specific task.

Hyperparameter Tuning and Model Evaluation

- Hyperparameter tuning in SageMaker involves automatically searching for the best combination of hyperparameters to optimize the model’s performance.

- Search strategies include grid search and random search; SageMaker Automatic Model Tuning also offers Bayesian optimization (its default) and Hyperband.

- Model evaluation is done using metrics like accuracy, precision, recall, F1 score, and ROC-AUC to assess the model’s performance on the validation set.
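The difference between grid search and random search is easiest to see locally. In this sketch, `toy_objective` is a hypothetical stand-in for a validation metric, and `depth`/`eta` are illustrative parameter names. In SageMaker, each evaluation of the objective would instead be a full training job.

```python
import itertools
import random
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]


def grid_search(objective: Callable[[Params], float],
                grid: Dict[str, List[float]]) -> Tuple[Params, float]:
    """Evaluate every combination in the grid; return the best (params, score)."""
    names = list(grid)
    best: Tuple[Params, float] = ({}, float("-inf"))
    for combo in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, combo))
        score = objective(params)
        if score > best[1]:
            best = (params, score)
    return best


def random_search(objective: Callable[[Params], float],
                  bounds: Dict[str, Tuple[float, float]],
                  n_trials: int, seed: int = 0) -> Tuple[Params, float]:
    """Sample n_trials points uniformly inside the bounds; return the best."""
    rng = random.Random(seed)
    best: Tuple[Params, float] = ({}, float("-inf"))
    for _ in range(n_trials):
        params = {n: rng.uniform(lo, hi) for n, (lo, hi) in bounds.items()}
        score = objective(params)
        if score > best[1]:
            best = (params, score)
    return best


def toy_objective(p: Params) -> float:
    """Hypothetical validation score that peaks at depth=6, eta=0.1."""
    return -((p["depth"] - 6) ** 2) - 100 * (p["eta"] - 0.1) ** 2
```

Grid search cost grows multiplicatively with each added parameter, while random search spends a fixed trial budget and tends to cover each individual dimension more densely, which is why it is often preferred when only a few parameters really matter.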

Model Performance Metrics

- During training, it’s important to track metrics such as loss function value, accuracy, and validation error to monitor the model’s progress.

- Other metrics like confusion matrix, ROC curve, and precision-recall curve can provide deeper insights into the model’s performance and help identify areas for improvement.
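The classification metrics above follow directly from the confusion matrix. A standard-library sketch for binary labels (1 = positive), assuming predictions and scores for the validation set have already been collected, for example from a batch transform job. Libraries such as scikit-learn provide equivalents; spelling them out here just makes the definitions visible.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count true/false positives and negatives for binary labels."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for t, p in zip(y_true, y_pred):
        if p == 1:
            counts["tp" if t == 1 else "fp"] += 1
        else:
            counts["fn" if t == 1 else "tn"] += 1
    return counts


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """Precision, recall, and their harmonic mean (F1); 0.0 when undefined."""
    c = confusion_counts(y_true, y_pred)
    precision = c["tp"] / (c["tp"] + c["fp"]) if c["tp"] + c["fp"] else 0.0
    recall = c["tp"] / (c["tp"] + c["fn"]) if c["tp"] + c["fn"] else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC-AUC as the chance a random positive outscores a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise AUC computation is quadratic in the number of examples, which is fine for a sanity check but not for large validation sets, where a sort-based computation is the usual approach.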

Deployment of AI models from SageMaker

Deploying AI models from SageMaker is a crucial step in the machine learning workflow. It involves making the trained model available for inference or prediction on new data. This process ensures that the model can be used to make real-time decisions or automate tasks based on the learnings from the training phase.

Deployment Process in SageMaker

To deploy a trained AI model from SageMaker, you typically follow these steps:

- Create a SageMaker model that points to the trained model artifacts in S3 and the inference container image.

- Create an endpoint configuration that sets the instance type and number of instances to meet the deployment requirements.

- Create the endpoint from that configuration; once its status is InService, it serves predictions.
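Hosting a model on a real-time endpoint maps onto three calls on boto3's `sagemaker` client: `create_model`, `create_endpoint_config`, and `create_endpoint`. The sketch below only builds their keyword arguments so the relationships between the names are visible; the naming scheme, the `AllTraffic` variant name, and the default instance type are assumptions.

```python
def deployment_requests(name: str, image_uri: str, model_data_url: str, role_arn: str,
                        instance_type: str = "ml.m5.large", instance_count: int = 1) -> dict:
    """Keyword arguments for create_model -> create_endpoint_config -> create_endpoint.

    The dict preserves insertion order, so iterating it gives the call order.
    """
    model_name = f"{name}-model"
    config_name = f"{name}-config"
    return {
        "create_model": {
            "ModelName": model_name,
            # Inference image plus the model.tar.gz produced by training.
            "PrimaryContainer": {"Image": image_uri, "ModelDataUrl": model_data_url},
            "ExecutionRoleArn": role_arn,
        },
        "create_endpoint_config": {
            "EndpointConfigName": config_name,
            "ProductionVariants": [
                {
                    "VariantName": "AllTraffic",
                    "ModelName": model_name,
                    "InstanceType": instance_type,
                    "InitialInstanceCount": instance_count,
                    "InitialVariantWeight": 1.0,
                }
            ],
        },
        "create_endpoint": {"EndpointName": f"{name}-endpoint", "EndpointConfigName": config_name},
    }
```

With a client `sm = boto3.client("sagemaker")`, each entry would be applied in order as `getattr(sm, call)(**kwargs)`. Endpoint creation is asynchronous, so a caller would typically wait with `sm.get_waiter("endpoint_in_service")` before sending traffic.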

Deployment Options in SageMaker

SageMaker offers various deployment options to meet different use cases and requirements:

- Real-time Endpoint: Deploy the model for real-time inference on new data.

- Batch Transform: Perform batch predictions on a large dataset using SageMaker batch transform.

- Edge Deployment: Deploy the model to edge devices for offline inference.
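For the batch option, a transform job reuses an existing SageMaker model (the one registered with `create_model`), reads input from S3, writes predictions back to S3, and releases its instances when it finishes, with no persistent endpoint. A minimal sketch of the keyword arguments for boto3's `create_transform_job`, assuming CSV input with one record per line. The names, URIs, and instance settings are placeholders.

```python
def transform_job_request(job_name: str, model_name: str, input_uri: str, output_uri: str,
                          instance_type: str = "ml.m5.xlarge", instance_count: int = 1) -> dict:
    """Keyword arguments for sagemaker.create_transform_job (sketch)."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,  # must already exist in SageMaker
        "TransformInput": {
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_uri}},
            "ContentType": "text/csv",
            "SplitType": "Line",  # treat each line as a separate record
        },
        "TransformOutput": {"S3OutputPath": output_uri, "AssembleWith": "Line"},
        "TransformResources": {"InstanceType": instance_type, "InstanceCount": instance_count},
        # Send several records per request to the model container.
        "BatchStrategy": "MultiRecord",
    }
```

Splitting by line and reassembling by line keeps each prediction on the same line position as its input record, which makes joining results back to the source data straightforward.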

Monitoring and Scaling Deployed Models

Monitoring and scaling deployed models in SageMaker is essential to ensure optimal performance and reliability. Best practices include:

- Set up monitoring to track model performance, data drift, and resource utilization.

- Implement auto-scaling to adjust the number of instances based on traffic demand.

- Regularly review logs and metrics to identify and address any issues proactively.
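Endpoint auto-scaling is configured through Application Auto Scaling rather than the SageMaker API itself. This sketch builds the keyword arguments for boto3's `application-autoscaling` client calls `register_scalable_target` and `put_scaling_policy`, using target tracking on the `SageMakerVariantInvocationsPerInstance` metric (invocations per instance per minute). The capacity limits, target value, and cooldowns are assumptions. `rough_instance_estimate` is a hypothetical back-of-envelope helper for picking limits; it approximates, but is not, the service's actual scaling algorithm.

```python
import math


def autoscaling_requests(endpoint_name: str, variant_name: str = "AllTraffic",
                         min_instances: int = 1, max_instances: int = 4,
                         target_invocations_per_instance: float = 100.0) -> dict:
    """Keyword arguments for the application-autoscaling client (sketch)."""
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    return {
        "register_scalable_target": {
            "ServiceNamespace": "sagemaker",
            "ResourceId": resource_id,
            "ScalableDimension": dimension,
            "MinCapacity": min_instances,
            "MaxCapacity": max_instances,
        },
        "put_scaling_policy": {
            "PolicyName": f"{endpoint_name}-invocations-target",
            "ServiceNamespace": "sagemaker",
            "ResourceId": resource_id,
            "ScalableDimension": dimension,
            "PolicyType": "TargetTrackingScaling",
            "TargetTrackingScalingPolicyConfiguration": {
                "TargetValue": target_invocations_per_instance,
                "PredefinedMetricSpecification": {
                    "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
                },
                "ScaleInCooldown": 300,  # seconds; scale in slowly
                "ScaleOutCooldown": 60,  # seconds; scale out quickly
            },
        },
    }


def rough_instance_estimate(invocations_per_minute: float, target_per_instance: float,
                            min_instances: int, max_instances: int) -> int:
    """Hypothetical sizing helper: instances needed to hold load at the target, clamped."""
    needed = math.ceil(invocations_per_minute / target_per_instance)
    return max(min_instances, min(max_instances, needed))
```

The asymmetric cooldowns reflect a common trade-off: adding capacity quickly protects latency during spikes, while removing it slowly avoids thrashing when traffic fluctuates.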

Integration with External Applications or Services

Integrating SageMaker models with external applications or services can extend the functionality and usability of the models. This can be achieved by:

- Using SageMaker SDKs or APIs to interact with the deployed model from external applications.

- Leveraging AWS Lambda functions or API Gateway for seamless integration with external services.

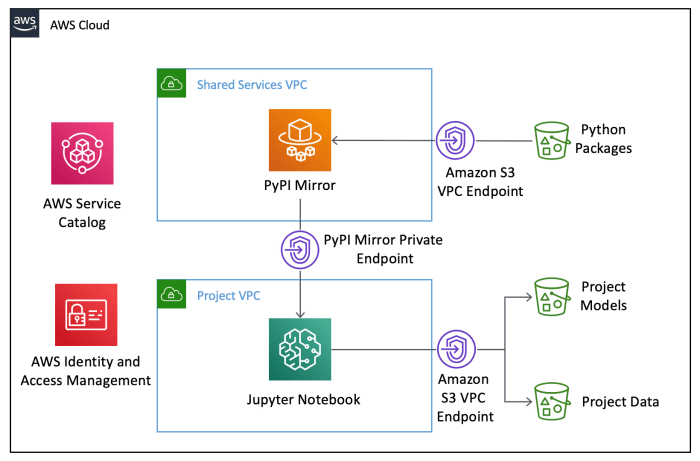

- Implementing security measures such as IAM roles and VPC endpoints to ensure secure communication between SageMaker and external systems.
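A minimal sketch of the Lambda and API Gateway pattern: a Python Lambda handler, assumed to sit behind an API Gateway proxy integration, forwards a JSON request to a SageMaker endpoint through the `sagemaker-runtime` client's `invoke_endpoint` call. The endpoint name, the `{"features": [...]}` request shape, and the CSV payload format are assumptions. The Lambda's execution role would also need the `sagemaker:InvokeEndpoint` permission. The optional `runtime_client` parameter is a hypothetical hook added only so the handler can be exercised without AWS.

```python
import json
from typing import Any, Dict, Optional

ENDPOINT_NAME = "churn-endpoint"  # hypothetical endpoint name


def lambda_handler(event: Dict[str, Any], context: Any,
                   runtime_client: Optional[Any] = None) -> Dict[str, Any]:
    """API Gateway (proxy integration) -> SageMaker endpoint -> JSON response."""
    if runtime_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        runtime_client = boto3.client("sagemaker-runtime")

    payload = json.loads(event.get("body") or "{}")
    # Assumed request shape: {"features": [1.0, 2.0, ...]}, sent on as one CSV row.
    csv_row = ",".join(str(x) for x in payload.get("features", []))
    if not csv_row:
        return {"statusCode": 400, "body": json.dumps({"error": "features required"})}

    response = runtime_client.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="text/csv",
        Body=csv_row,
    )
    # The response body is a stream; read and decode the model's output.
    prediction = response["Body"].read().decode("utf-8")
    return {"statusCode": 200, "body": json.dumps({"prediction": prediction.strip()})}
```

Validating the request in the Lambda before calling the endpoint keeps malformed traffic from consuming endpoint capacity and gives callers a clear 400 response.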

Cost optimization and scalability in SageMaker

When working on AI models in SageMaker, it is essential to consider cost optimization and scalability to ensure efficient resource management and effective deployment of AI solutions.

Tips for optimizing costs in SageMaker

- Use Managed Spot Training: Run training jobs on spare EC2 capacity with Spot Instances to reduce training costs by up to 90% compared to On-Demand Instances, and save checkpoints to S3 so interrupted jobs can resume.

- Right-size Instances: Choose the appropriate instance type and size for your workloads to avoid over-provisioning and unnecessary expenses.

- Monitor Resource Utilization: Keep track of resource usage and optimize as needed to prevent idle resources and reduce costs.

- Use Cost Explorer: Leverage AWS Cost Explorer to analyze and visualize costs associated with SageMaker usage, enabling better cost management and optimization.
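Spot training is switched on per training job. This sketch takes an existing `create_training_job` request dict and returns a copy with `EnableManagedSpotTraining`, a `MaxWaitTimeInSeconds` limit (which SageMaker requires to be at least `MaxRuntimeInSeconds`), and an S3 `CheckpointConfig`. The checkpoint URI and time limits are placeholders. `spot_savings_pct` applies the savings formula AWS documents for managed spot training, using the `TrainingTimeInSeconds` and `BillableTimeInSeconds` values returned by `describe_training_job`.

```python
def with_managed_spot(request: dict, max_wait_seconds: int, checkpoint_uri: str) -> dict:
    """Return a copy of a create_training_job request with managed spot training enabled."""
    max_runtime = request["StoppingCondition"]["MaxRuntimeInSeconds"]
    if max_wait_seconds < max_runtime:
        raise ValueError("MaxWaitTimeInSeconds must be >= MaxRuntimeInSeconds")
    updated = dict(request)  # shallow copy; nested dicts we change are rebuilt below
    updated["EnableManagedSpotTraining"] = True
    # Max wait covers both waiting for spot capacity and actual training time.
    updated["StoppingCondition"] = {**request["StoppingCondition"],
                                    "MaxWaitTimeInSeconds": max_wait_seconds}
    # Checkpoints let an interrupted spot job resume instead of starting over.
    updated["CheckpointConfig"] = {"S3Uri": checkpoint_uri}
    return updated


def spot_savings_pct(billable_seconds: float, training_seconds: float) -> float:
    """Savings versus on-demand: (1 - BillableTime / TrainingTime) * 100."""
    if training_seconds <= 0:
        raise ValueError("training_seconds must be positive")
    return (1 - billable_seconds / training_seconds) * 100
```

Returning a modified copy rather than mutating the input makes it easy to compare the on-demand and spot versions of the same job side by side.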

Strategies for scaling AI model development in SageMaker

- Parallel Processing: Implement parallel processing techniques to distribute workloads efficiently and expedite AI model development.

- Automated Pipelines: Set up automated pipelines in SageMaker to streamline the development process and scale AI model training and deployment effectively.

- Model Versioning: Maintain version control of AI models in SageMaker to facilitate scalability and ensure seamless updates and deployments.

Leveraging SageMaker’s capabilities for cost-effective and scalable AI solutions

- Use Managed Services: Take advantage of SageMaker’s managed services for data preparation, model training, and deployment to optimize costs and scalability.

- Containerization: Containerize AI models in SageMaker to enhance portability, scalability, and cost efficiency by leveraging container-based deployment.

- Serverless Inference: Use SageMaker Serverless Inference for intermittent or unpredictable traffic; it scales compute with request volume, including down to zero, so you don't pay for idle endpoint instances.

Managing resources efficiently in SageMaker

- Resource Tagging: Tag resources in SageMaker to categorize and track usage, enabling better cost allocation and resource management.

- Cost Allocation Reports: Activate your SageMaker resource tags as cost allocation tags in AWS Billing, then use the resulting cost reports to analyze spending patterns, identify cost drivers, and optimize resource utilization.

- Resource Cleanup: Regularly clean up unused resources in SageMaker to eliminate unnecessary costs and maintain a lean infrastructure for efficient AI model development.
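Tagging and cleanup can be partly automated. A minimal sketch: `tag_request` builds keyword arguments for boto3's `sagemaker` `add_tags` call, and `stale_endpoints` picks cleanup candidates from records shaped like the `Endpoints` list that `list_endpoints` returns (only `EndpointName` and `CreationTime` are used). The 30-day threshold, the tag keys, and the keep-list convention are assumptions; the sketch deliberately stops at selecting candidates and leaves the actual `delete_endpoint` calls to a reviewed step.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, List, Set


def tag_request(resource_arn: str, tags: Dict[str, str]) -> dict:
    """Keyword arguments for sagemaker.add_tags, with tags in a stable order."""
    return {
        "ResourceArn": resource_arn,
        "Tags": [{"Key": k, "Value": v} for k, v in sorted(tags.items())],
    }


def stale_endpoints(endpoints: Iterable[dict], max_age_days: int,
                    keep: Set[str], now: datetime) -> List[str]:
    """Names of endpoints created more than max_age_days ago and not on the keep list."""
    cutoff = now - timedelta(days=max_age_days)
    return [
        e["EndpointName"]
        for e in endpoints
        if e["CreationTime"] < cutoff and e["EndpointName"] not in keep
    ]
```

For example, `stale_endpoints(eps, 30, {"prod"}, datetime.now(timezone.utc))` would list every endpoint older than 30 days except `prod`. Creation age is only a rough signal; checking recent invocation metrics in CloudWatch before deleting anything is the safer rule.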

In conclusion, building AI models in SageMaker opens up a wide range of possibilities for developers and data scientists. By following the guidelines outlined in this guide, you can navigate the complexities of AI model development with confidence and precision, ultimately driving innovation and success in your projects.
