AWS SageMaker brings the main stages of a machine learning project into a single managed service: data preparation, model building and training, and deployment. This article walks through how SageMaker supports each stage of the data science workflow and where its individual tools fit in.

Overview of AWS SageMaker

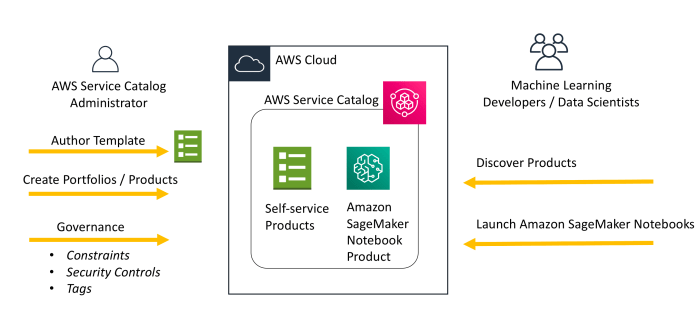

AWS SageMaker is a comprehensive machine learning service provided by Amazon Web Services (AWS) that simplifies the process of building, training, and deploying machine learning models. It offers a wide range of tools and features to support data scientists in their projects.

Core Functionalities of AWS SageMaker

AWS SageMaker provides a variety of core functionalities that are essential for data science projects:

- Build: SageMaker offers a range of algorithms and frameworks to build machine learning models, allowing users to experiment with different approaches.

- Train: Users can easily train their models using scalable compute resources in the cloud, enabling faster training times for large datasets.

- Deploy: Once a model is trained, SageMaker can host it as a managed endpoint for real-time predictions or run it over stored data with batch transform jobs.

Integration into Data Science Workflow

AWS SageMaker fits into the data science workflow by providing an end-to-end platform for machine learning projects. It streamlines the process from data preparation to model deployment, allowing data scientists to focus on building and improving models rather than managing infrastructure.

Key Features of AWS SageMaker

- Automatic Model Tuning: SageMaker automates the process of hyperparameter tuning to optimize model performance.

- Managed Notebooks: SageMaker provides Jupyter notebooks that are fully managed and can be easily shared among team members for collaboration.

- Model Monitoring: SageMaker Model Monitor checks data captured from deployed endpoints against a baseline on a schedule, flagging drift in data quality and model quality.

Data Preparation in AWS SageMaker

Data preparation is a crucial step in the data science workflow, as it involves cleaning, transforming, and organizing data to ensure its suitability for analysis in AWS SageMaker.

Data Preprocessing Capabilities

AWS SageMaker provides a range of data preprocessing capabilities to help users prepare their datasets. SageMaker Data Wrangler, for example, offers built-in transforms for feature engineering, scaling, and normalization, as well as steps for handling missing values and outliers.
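To illustrate what such transforms do (this is not SageMaker's own implementation), here is a minimal standard-library sketch of three common steps: median imputation of missing values, clipping outliers to analyst-chosen bounds, and min-max scaling to the range [0, 1]. A script like this could run interactively in a notebook or inside a SageMaker Processing job; the function names and the clipping bounds are hypothetical choices for the example.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values.

    Assumes at least one value is not None.
    """
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def clip_outliers(values, lower, upper):
    """Clamp every value into [lower, upper]; the bounds are chosen by the analyst."""
    return [min(max(v, lower), upper) for v in values]


def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0.0 and the maximum to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


if __name__ == "__main__":
    raw = [3.0, None, 7.0, 250.0, 5.0]  # hypothetical feature column
    cleaned = min_max_scale(clip_outliers(impute_median(raw), 0.0, 10.0))
    print(cleaned)
```

The steps are applied in that order on purpose: imputing first lets the scaler see a complete column, and clipping before scaling keeps one extreme value from compressing all the others toward zero.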

Tools for Data Cleaning and Transformation

– SageMaker Processing: Allows users to run data preprocessing tasks in a scalable and cost-effective manner using managed infrastructure.

– Jupyter Notebooks: Users can leverage Jupyter Notebooks to interactively clean and transform data before training machine learning models.

– AWS Glue: Offers ETL (Extract, Transform, Load) capabilities to prepare and organize data for analysis in SageMaker.

Importing and Exporting Data

– Data Import: Users can bring data into SageMaker from sources such as Amazon S3, Amazon RDS, and Amazon Redshift; training jobs themselves most commonly read their input from Amazon S3.

– Data Export: Exporting processed data from SageMaker is straightforward, with options to save data back to Amazon S3 or other storage services for further analysis.
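Built-in algorithms such as XGBoost and Linear Learner expect CSV training data without a header row and with the target in the first column. The sketch below uses only the standard library to serialize records into that layout before export; the field names are hypothetical.

```python
import csv
import io


def to_sagemaker_csv(rows, label_key, feature_keys):
    """Serialize dict rows as headerless CSV with the label in column 0.

    This matches the CSV layout expected by built-in algorithms such as
    XGBoost and Linear Learner (no header record, target first).
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for row in rows:
        # Label first, then features in a fixed, explicit order.
        writer.writerow([row[label_key]] + [row[k] for k in feature_keys])
    return buf.getvalue()


if __name__ == "__main__":
    records = [
        {"churned": 1, "tenure": 0.5, "plans": 2},  # hypothetical fields
        {"churned": 0, "tenure": 3.0, "plans": 1},
    ]
    print(to_sagemaker_csv(records, "churned", ["tenure", "plans"]))
```

With boto3 installed and credentials configured, the result could then be uploaded with `boto3.client("s3").put_object(Bucket="my-bucket", Key="train/train.csv", Body=body.encode("utf-8"))`, where the bucket and key are placeholders.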

Model Building in AWS SageMaker

AWS SageMaker supports a variety of algorithms and models for building machine learning models, covering different use cases and requirements. This section covers the supported algorithms, the training process, and the tuning options SageMaker provides.

Algorithms and Models Supported by AWS SageMaker

AWS SageMaker provides a wide range of built-in algorithms and models that cover various machine learning tasks such as regression, classification, clustering, and more. Some of the commonly used algorithms supported by SageMaker include:

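- Linear Learner (regression and classification)

- XGBoost (gradient-boosted trees for regression and classification)

- Random Cut Forest (anomaly detection)

- K-Means (clustering)

- DeepAR (time-series forecasting)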

Training a Machine Learning Model using AWS SageMaker

Training a machine learning model in AWS SageMaker involves setting up the training data, selecting the appropriate algorithm, configuring the training job parameters, and launching the training process. The steps to train a model in SageMaker typically include:

- Preparing the training data and storing it in an S3 bucket

- Defining the algorithm and model hyperparameters

- Configuring the training job settings such as instance type and number of instances

- Launching the training job and monitoring the progress
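These steps map closely onto the low-level `CreateTrainingJob` API. The sketch below only builds the request as a Python dictionary, so it runs without AWS access. The job name, container image URI, role ARN, S3 paths, instance settings, volume size, and time limit are all placeholders or assumptions to replace with your own.

```python
def build_training_job_request(job_name, image_uri, role_arn, train_s3_uri,
                               output_s3_uri, hyperparameters,
                               instance_type="ml.m5.xlarge", instance_count=1):
    """Return keyword arguments for the SageMaker CreateTrainingJob API."""
    return {
        "TrainingJobName": job_name,
        # Which algorithm container to run and how input data is delivered.
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        # Step 1: training data already stored under an S3 prefix.
        "InputDataConfig": [
            {
                "ChannelName": "train",
                "DataSource": {
                    "S3DataSource": {
                        "S3DataType": "S3Prefix",
                        "S3Uri": train_s3_uri,
                        "S3DataDistributionType": "FullyReplicated",
                    }
                },
                "ContentType": "text/csv",
            }
        ],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        # Step 3: instance type and number of instances.
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
            "VolumeSizeInGB": 30,
        },
        # Step 2: the API expects hyperparameter values as strings.
        "HyperParameters": {k: str(v) for k, v in hyperparameters.items()},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


if __name__ == "__main__":
    request = build_training_job_request(
        job_name="demo-xgboost-job",                               # hypothetical
        image_uri="<xgboost-image-uri-for-your-region>",           # placeholder
        role_arn="arn:aws:iam::123456789012:role/DemoSageMaker",   # placeholder
        train_s3_uri="s3://my-bucket/train/",                      # placeholder
        output_s3_uri="s3://my-bucket/output/",                    # placeholder
        hyperparameters={"objective": "binary:logistic", "num_round": 100},
    )
    print(request["HyperParameters"])
```

For step 4, the request would be submitted with `boto3.client("sagemaker").create_training_job(**request)`, and its progress polled with `describe_training_job(TrainingJobName=...)`, whose response includes a `TrainingJobStatus` field.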

Optimizing and Tuning Models in AWS SageMaker

Once the initial training is complete, the next step is usually to tune the model to improve its performance and accuracy. SageMaker's automatic model tuning (also called hyperparameter tuning) launches multiple training jobs across the hyperparameter ranges you specify and selects the one that scores best on a chosen objective metric. The process of optimizing and tuning models in SageMaker typically involves:

- Defining the hyperparameter ranges and tuning strategy

- Launching the hyperparameter tuning job

- Tracking and analyzing the tuning results to identify the best-performing model

- Deploying the optimized model for inference
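A tuning job is described by a `HyperParameterTuningJobConfig`, which holds the search strategy, the objective metric, resource limits, and the parameter ranges from the first step. The sketch assumes the built-in XGBoost algorithm (hence `eta`, `max_depth`, and the `validation:auc` objective, which assumes the training jobs receive a validation channel and report AUC); the specific ranges and limits are illustrative, not recommendations.

```python
def build_tuning_config(max_jobs=20, max_parallel=2):
    """Return a HyperParameterTuningJobConfig dict for an XGBoost tuning job."""
    # Sanity check for this sketch: running more jobs in parallel than in
    # total makes no sense.
    if max_parallel > max_jobs:
        raise ValueError("max_parallel must not exceed max_jobs")
    return {
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {
            "Type": "Maximize",
            "MetricName": "validation:auc",
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        # Range bounds are passed to the API as strings.
        "ParameterRanges": {
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3",
                 "ScalingType": "Logarithmic"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10",
                 "ScalingType": "Auto"},
            ],
        },
    }


if __name__ == "__main__":
    print(build_tuning_config()["ResourceLimits"])
```

The config would be passed as `HyperParameterTuningJobConfig` to `create_hyper_parameter_tuning_job`, together with a `TrainingJobDefinition` carrying the training settings and any fixed values under `StaticHyperParameters`. Once the job completes, `describe_hyper_parameter_tuning_job` reports the `BestTrainingJob`, whose model artifacts can then be deployed.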

Deployment and Management in AWS SageMaker

When it comes to deploying and managing models in AWS SageMaker, there are specific steps and features that are crucial for ensuring the success of your machine learning projects.

Deploying a Model for Real-Time Predictions

- Once your model is trained and ready, you can deploy it in SageMaker for real-time predictions by creating an endpoint.

- This endpoint allows you to send new data to the model and receive predictions quickly and efficiently.

- You can monitor the deployed endpoint with Amazon CloudWatch, which records invocation metrics such as latency and error counts and stores the container logs; these are also reachable from the SageMaker console.
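Creating a real-time endpoint takes three API calls: `CreateModel` (the container image plus the trained model artifacts in S3), `CreateEndpointConfig` (instance type and count for each production variant), and `CreateEndpoint`. The sketch below assembles those requests plus a `text/csv` payload for one prediction. Every name, URI, and ARN is a placeholder, and the variant name `AllTraffic` is only a conventional choice.

```python
def build_endpoint_requests(model_name, endpoint_name, image_uri,
                            model_data_url, role_arn,
                            instance_type="ml.m5.large"):
    """Return request dicts for CreateModel, CreateEndpointConfig, CreateEndpoint."""
    config_name = f"{endpoint_name}-config"
    return {
        "create_model": {
            "ModelName": model_name,
            "PrimaryContainer": {"Image": image_uri, "ModelDataUrl": model_data_url},
            "ExecutionRoleArn": role_arn,
        },
        "create_endpoint_config": {
            "EndpointConfigName": config_name,
            "ProductionVariants": [{
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                "InitialInstanceCount": 1,
                "InstanceType": instance_type,
                "InitialVariantWeight": 1.0,
            }],
        },
        "create_endpoint": {
            "EndpointName": endpoint_name,
            "EndpointConfigName": config_name,
        },
    }


def csv_payload(features):
    """Encode one inference row as text/csv (features only, no label column)."""
    return ",".join(str(x) for x in features)


if __name__ == "__main__":
    reqs = build_endpoint_requests(
        "demo-model", "demo-endpoint",                            # hypothetical
        "<inference-image-uri>",                                  # placeholder
        "s3://my-bucket/output/model.tar.gz",                     # placeholder
        "arn:aws:iam::123456789012:role/DemoSageMaker",           # placeholder
    )
    print(reqs["create_endpoint"], csv_payload([0.5, 2]))
```

To submit them with boto3: `sm = boto3.client("sagemaker")`, then `sm.create_model(**reqs["create_model"])`, `sm.create_endpoint_config(**reqs["create_endpoint_config"])`, and `sm.create_endpoint(**reqs["create_endpoint"])`. Predictions go through the runtime client, `boto3.client("sagemaker-runtime").invoke_endpoint(EndpointName="demo-endpoint", ContentType="text/csv", Body=csv_payload([0.5, 2]))`, whose `Body` field is a stream to `.read()`.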

Monitoring and Management Features in AWS SageMaker

- AWS SageMaker offers built-in monitoring capabilities that allow you to track the performance of your models and detect any anomalies or drift in the data.

- You can set up Amazon CloudWatch alarms on endpoint metrics, with notifications (for example through Amazon SNS), to alert you when there are issues with your deployed models, ensuring timely intervention.

- SageMaker Model Monitor runs scheduled monitoring jobs on captured inference data, checking data quality, model quality, bias drift, and feature attribution drift against a baseline and reporting any violations.
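For the alarms mentioned above: SageMaker publishes endpoint invocation metrics to CloudWatch under the `AWS/SageMaker` namespace, including `Invocation5XXErrors`, with `EndpointName` and `VariantName` as dimensions. The sketch builds a `put_metric_alarm` request that fires when any 5XX error occurs within a five-minute window. The alarm name, threshold, and period are assumptions. For notifications, you would add an `AlarmActions` list containing an SNS topic ARN of your own.

```python
def build_5xx_alarm(endpoint_name, variant_name="AllTraffic", threshold=1):
    """Return keyword arguments for CloudWatch put_metric_alarm on endpoint 5XX errors."""
    return {
        "AlarmName": f"{endpoint_name}-5xx-errors",   # hypothetical naming scheme
        "Namespace": "AWS/SageMaker",
        "MetricName": "Invocation5XXErrors",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Sum",          # total errors per period
        "Period": 300,               # five-minute windows
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # Periods with no traffic report no data; do not treat them as failures.
        "TreatMissingData": "notBreaching",
    }


if __name__ == "__main__":
    print(build_5xx_alarm("demo-endpoint")["AlarmName"])
```

The request would be sent with `boto3.client("cloudwatch").put_metric_alarm(**alarm)`. The same pattern applies to other endpoint metrics such as `ModelLatency`.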

Scaling and Updating Models in Production

- SageMaker endpoints can scale in production: you can change the instance type or count, or attach an auto scaling policy that adds and removes instances as the workload and demand change.

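- To update a model, you point the endpoint at a new endpoint configuration; SageMaker provisions the new version and shifts traffic to it while the endpoint stays in service, with blue/green deployment options such as canary or linear traffic shifting.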

- You can use SageMaker Pipelines to automate retraining on new data and registering updated model versions, so that predictions stay accurate and up to date.
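Endpoint auto scaling is configured through Application Auto Scaling. You register the production variant as a scalable target, then attach a target-tracking policy that keeps the predefined `SageMakerVariantInvocationsPerInstance` metric near a chosen value. The sketch builds both requests. The capacity bounds and the target of 100 invocations per instance are illustrative assumptions, not tuned values.

```python
def build_autoscaling_requests(endpoint_name, variant_name="AllTraffic",
                               min_capacity=1, max_capacity=4,
                               target_invocations=100.0):
    """Return (register_scalable_target, put_scaling_policy) request dicts."""
    if min_capacity > max_capacity:
        raise ValueError("min_capacity must not exceed max_capacity")
    # Application Auto Scaling identifies a SageMaker variant by this resource ID.
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    target = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }
    policy = {
        "PolicyName": f"{endpoint_name}-invocations-target",  # hypothetical name
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_invocations,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance",
            },
        },
    }
    return target, policy


if __name__ == "__main__":
    target, policy = build_autoscaling_requests("demo-endpoint")
    print(target["ResourceId"], policy["PolicyType"])
```

They would be submitted with `aas = boto3.client("application-autoscaling")`, followed by `aas.register_scalable_target(**target)` and `aas.put_scaling_policy(**policy)`. Rolling out a new model version is a separate step: create a new endpoint configuration, then call `update_endpoint(EndpointName="demo-endpoint", EndpointConfigName="demo-endpoint-config-v2")` on the SageMaker client.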

In conclusion, AWS SageMaker gives data scientists a single managed environment for data preparation, model building, and deployment, so that more of their time goes into the models themselves and less into the infrastructure around them.
