This guide walks through deploying machine learning models on Amazon Web Services (AWS), covering the essential steps and best practices for a smooth deployment process.

It covers preparing ML models for deployment, deploying them with AWS SageMaker, and monitoring and managing them once they are live.

Overview of Deploying ML Models on AWS

Deploying machine learning (ML) models on AWS means making trained models available to real-world applications by hosting them on Amazon Web Services (AWS) infrastructure.

When it comes to backup solutions, AWS S3 is a popular choice among businesses due to its scalability and cost-effectiveness. With AWS S3 backup solutions, companies can securely store their data and easily retrieve it when needed.

Using AWS for deploying ML models offers several advantages, including scalability, flexibility, cost-effectiveness, and ease of integration with other AWS services. AWS provides a wide range of tools and services specifically designed for ML model deployment, making it a popular choice among data scientists and developers.

For handling big data ETL processes on AWS, there are several powerful tools available. These big data ETL tools on AWS offer efficient data transformation capabilities, allowing organizations to process large volumes of data quickly and accurately.

Benefits of Leveraging AWS Services for ML Model Deployment

- Scalability: AWS allows for easy scaling of resources to accommodate varying workloads, ensuring optimal performance for deployed ML models.

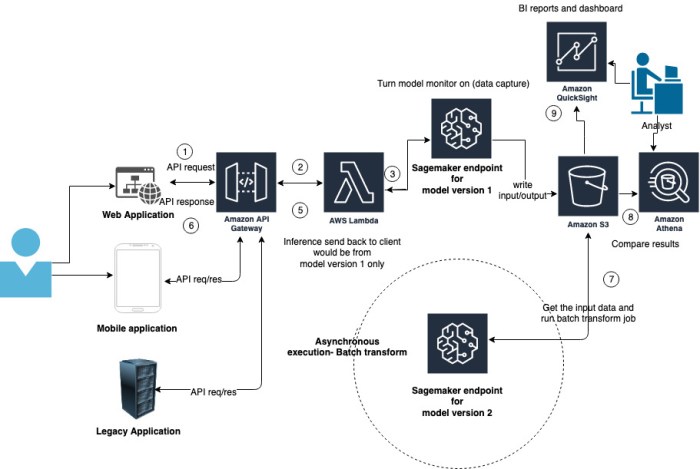

- Flexibility: With AWS, developers can choose from a variety of deployment options, such as Amazon SageMaker, AWS Lambda, or Amazon ECS, based on their specific needs and requirements.

- Cost-effectiveness: AWS offers pay-as-you-go pricing models, allowing organizations to only pay for the resources they use, making it a cost-effective solution for deploying ML models.

- Integration with other AWS services: AWS provides seamless integration with other services like Amazon S3, Amazon Redshift, and Amazon RDS, enabling data scientists to build end-to-end ML pipelines effortlessly.

Preparing ML Models for Deployment on AWS

Before deploying ML models on AWS, it is crucial to prepare them properly to ensure optimal performance and functionality. This involves several key steps and considerations.

Data Preprocessing

Data preprocessing plays a vital role in preparing ML models for deployment on AWS. It involves cleaning, transforming, and organizing the data to make it suitable for training and inference. Some common data preprocessing tasks include handling missing values, encoding categorical variables, scaling features, and splitting the data into training and testing sets.
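The preprocessing tasks listed above can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not a production pipeline: the helper names (`impute_mean`, `standardize`, `one_hot`, `train_test_split`) and the toy "age" and "plan" columns are hypothetical, and real projects would more likely use a library such as pandas or scikit-learn.

```python
import random
from statistics import mean, pstdev

def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def standardize(values):
    """Scale values to zero mean and unit variance (population std)."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

def one_hot(values):
    """Encode categories as one-hot vectors, with columns in sorted order."""
    categories = sorted(set(values))
    vectors = [[1 if v == c else 0 for c in categories] for v in values]
    return vectors, categories

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle rows reproducibly and split them into train and test lists."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical toy data: an "age" column with a gap and a "plan" category.
ages = [25, None, 35, 40]
plans = ["free", "pro", "free", "team"]

ages_scaled = standardize(impute_mean(ages))
plan_vectors, plan_columns = one_hot(plans)
rows = [[a] + p for a, p in zip(ages_scaled, plan_vectors)]
train_rows, test_rows = train_test_split(rows)
```

Whatever transformations are applied before training must be applied identically at inference time, so it is worth keeping them in reusable functions like these rather than in ad hoc notebook cells.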

When it comes to storing big data files on AWS, businesses can rely on the robust infrastructure provided by Amazon. With AWS big data file storage, companies can securely store and manage their data, ensuring easy access and high levels of reliability.

Model Training and Evaluation

Prior to deployment on AWS, ML models need to be trained and evaluated thoroughly to ensure their accuracy and reliability. This involves selecting appropriate algorithms, tuning hyperparameters, and assessing the model’s performance using metrics like accuracy, precision, recall, and F1 score.
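To make the evaluation metrics above concrete, here is a small sketch that computes accuracy, precision, recall, and F1 score for a binary classifier from lists of true and predicted labels. The function name `classification_metrics` and the sample labels are illustrative assumptions, not part of any AWS API.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical labels from a held-out test set.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
# Here there are 3 true positives, 1 false positive, and 1 false negative,
# so all four metrics come out to 0.75.
```

Accuracy alone can be misleading on imbalanced data, which is why precision and recall are usually reported alongside it.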

Model Serialization

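Once the ML model is trained and evaluated, it needs to be serialized into a format that can be loaded for inference. Common choices include pickle or joblib for Python objects, framework-native formats (some libraries, such as XGBoost, can save models as JSON), and ONNX for portability across frameworks. Because unpickling can execute arbitrary code, load pickle files only from trusted sources.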
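As a rough sketch of the serialization step, the example below pickles a model and wraps it in a gzip-compressed tar archive, which is the `model.tar.gz` layout that SageMaker hosting commonly expects for model artifacts in S3. The helper names `package_model` and `load_model` are hypothetical, and a plain dictionary of parameters stands in for a real trained model.

```python
import pickle
import tarfile
import tempfile
from pathlib import Path

def package_model(model, out_dir, artifact_name="model.pkl"):
    """Pickle `model` and wrap it in a gzip-compressed tar archive."""
    out_dir = Path(out_dir)
    artifact_path = out_dir / artifact_name
    artifact_path.write_bytes(pickle.dumps(model))

    archive_path = out_dir / "model.tar.gz"
    with tarfile.open(str(archive_path), "w:gz") as tar:
        # arcname keeps the file at the archive root instead of a temp path.
        tar.add(str(artifact_path), arcname=artifact_name)
    return archive_path

def load_model(archive_path, artifact_name="model.pkl"):
    """Read the pickled model back out of the archive (trusted files only)."""
    with tarfile.open(str(archive_path), "r:gz") as tar:
        member = tar.extractfile(artifact_name)
        return pickle.loads(member.read())

# A dict of parameters stands in for a trained model in this sketch.
toy_model = {"weights": [0.4, -1.2], "bias": 0.1}

with tempfile.TemporaryDirectory() as tmp:
    archive = package_model(toy_model, tmp)
    restored = load_model(archive)
```

A round trip like this is a cheap check that the artifact can actually be loaded before it is uploaded to S3 and referenced by a deployment.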

Integration with AWS Services

Finally, the ML model needs to be integrated with AWS services such as Amazon SageMaker, AWS Lambda, or Amazon EC2 for deployment. These services provide scalable infrastructure, monitoring, and management capabilities to ensure the successful deployment and operation of ML models on AWS.

Deploying ML Models on AWS SageMaker

AWS SageMaker is a fully managed service that allows data scientists and developers to build, train, and deploy machine learning models quickly and easily. It provides a complete environment for developing and deploying ML models, including data processing, model training, and model hosting.

AWS SageMaker Service Overview

Deploying ML models on AWS SageMaker offers several advantages compared to other AWS services:

- Integrated Environment: SageMaker provides a seamless workflow for training and deploying ML models, eliminating the need to manage separate services for each step of the process.

- Scalability: SageMaker can easily scale to handle large datasets and high computational loads, ensuring optimal performance for deployed models.

- Cost-Effectiveness: With SageMaker’s pay-as-you-go pricing model, users only pay for the resources they use, making it a cost-effective option for deploying ML models.

Deploying an ML Model on AWS SageMaker: Step-by-Step Guide

- Prepare Your Model: Before deploying your ML model on SageMaker, make sure it is trained and optimized for deployment.

- Create a Model: Package your trained model artifacts (typically as a model.tar.gz archive in Amazon S3) and create a SageMaker model that references the artifacts and the container image holding the inference code.

- Set Up an Endpoint Configuration: Create an endpoint configuration in SageMaker that specifies the model, instance type, number of instances, and other deployment settings.

- Deploy Your Model: Deploy your model to the configured endpoint, making it accessible for real-time inference requests.

- Test Your Model: Verify that your deployed model is working correctly by sending test inference requests and evaluating the results.
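The steps above can be sketched with the boto3 SageMaker client, using its `create_model`, `create_endpoint_config`, and `create_endpoint` calls, plus `invoke_endpoint` on the `sagemaker-runtime` client for testing. This is a minimal sketch under assumptions: the helper names `deploy_model` and `predict`, the default instance type, the `-config` naming scheme, and the `{"features": ...}` JSON payload are all illustrative, and the request format in practice depends on the inference container you use. The clients are passed in as arguments so the functions can be exercised without AWS credentials.

```python
import json

def deploy_model(sm_client, name, image_uri, model_data_url, role_arn,
                 instance_type="ml.m5.large", instance_count=1):
    """Create a SageMaker model, endpoint configuration, and endpoint."""
    # Register the model: inference container image plus artifacts in S3.
    sm_client.create_model(
        ModelName=name,
        PrimaryContainer={"Image": image_uri, "ModelDataUrl": model_data_url},
        ExecutionRoleArn=role_arn,
    )
    # Describe the hardware that will serve the model.
    config_name = f"{name}-config"
    sm_client.create_endpoint_config(
        EndpointConfigName=config_name,
        ProductionVariants=[{
            "VariantName": "AllTraffic",
            "ModelName": name,
            "InstanceType": instance_type,
            "InitialInstanceCount": instance_count,
        }],
    )
    # Start the endpoint; creation is asynchronous on the AWS side.
    sm_client.create_endpoint(EndpointName=name, EndpointConfigName=config_name)
    return name

def predict(runtime_client, endpoint_name, features):
    """Send one JSON inference request and decode the JSON response."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps({"features": features}),  # assumed payload shape
    )
    return json.loads(response["Body"].read())
```

In a real account, `sm_client` would be `boto3.client("sagemaker")` and `runtime_client` would be `boto3.client("sagemaker-runtime")`. The endpoint has to reach the `InService` status before it accepts requests, so a test call made immediately after `create_endpoint` will fail.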

Monitoring and Managing Deployed ML Models on AWS

Deploying machine learning models on AWS is just the first step in the process. It is equally important to monitor and manage these deployed models to ensure they are performing optimally and delivering accurate results. Monitoring and managing deployed ML models on AWS involves tracking their performance, making necessary adjustments, and ensuring they continue to meet the desired outcomes.

Importance of Monitoring Deployed ML Models on AWS

- Ensure model accuracy: Monitoring helps in detecting any drift or degradation in model performance, allowing for timely intervention to maintain accuracy.

- Identify anomalies: Monitoring can help in identifying anomalies or unexpected behavior in the model, which may require investigation and corrective action.

- Compliance and governance: Monitoring ensures that deployed ML models comply with regulatory requirements and organizational policies, reducing risks related to non-compliance.
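One way to make drift detection concrete is the population stability index (PSI), which compares the distribution of a feature or model score in recent traffic against a baseline such as the training data. The sketch below is one possible technique chosen for illustration, not something the services above prescribe; the function name, the bin count, and the floor of `1e-6` for empty buckets are assumptions.

```python
import math

def population_stability_index(baseline, recent, bins=10):
    """Compare two samples of one numeric feature; 0.0 means identical shares."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if the baseline has no spread.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge buckets.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(recent)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

# Hypothetical data: recent traffic is shifted upward relative to training.
baseline_scores = list(range(100))
recent_scores = [v + 50 for v in baseline_scores]

no_drift = population_stability_index(baseline_scores, baseline_scores)
drift = population_stability_index(baseline_scores, recent_scores)
```

A commonly cited rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, but the right threshold depends on the feature and on how sensitive the model is to it.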

Best Practices for Managing and Maintaining ML Models on AWS

- Establish monitoring metrics: Define key performance indicators (KPIs) and monitoring metrics to track the model’s performance and behavior over time.

- Automate monitoring processes: Implement automated monitoring processes to regularly check the model’s performance and alert stakeholders in case of deviations.

- Regular model retraining: Schedule regular model retraining sessions to update the model with new data and ensure it stays relevant and accurate.
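Automated monitoring on AWS is often built on Amazon CloudWatch alarms. As a hedged sketch, the function below builds the keyword arguments for the boto3 CloudWatch `put_metric_alarm` call, targeting the `ModelLatency` metric that SageMaker endpoints publish in the `AWS/SageMaker` namespace. The function name, the alarm naming scheme, the 500 ms default threshold, and the three five-minute evaluation periods are assumptions to adjust for your own service levels.

```python
def latency_alarm_params(endpoint_name, variant_name="AllTraffic",
                         threshold_ms=500, sns_topic_arn=None):
    """Build put_metric_alarm arguments for an endpoint latency alarm."""
    params = {
        "AlarmName": f"{endpoint_name}-high-latency",
        "Namespace": "AWS/SageMaker",
        "MetricName": "ModelLatency",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 300,            # seconds per evaluation window
        "EvaluationPeriods": 3,   # consecutive windows that must breach
        # ModelLatency is reported in microseconds, so convert from ms.
        "Threshold": threshold_ms * 1000,
        "ComparisonOperator": "GreaterThanThreshold",
    }
    if sns_topic_arn:
        # Notify stakeholders through an SNS topic when the alarm fires.
        params["AlarmActions"] = [sns_topic_arn]
    return params

alarm = latency_alarm_params("churn-endpoint", threshold_ms=250)
```

With credentials in place, the alarm would be created with `boto3.client("cloudwatch").put_metric_alarm(**alarm)`. Keeping the parameters in a plain function makes the thresholds easy to review and version alongside the model.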

Tips for Optimizing the Performance of Deployed ML Models on AWS

- Optimize model architecture: Fine-tune the model architecture and hyperparameters to improve performance and efficiency.

- Consider AWS Lambda for lightweight inference: For small models with intermittent traffic, AWS Lambda can serve predictions without always-on instances. Account for cold-start latency and deployment package size limits when responsiveness matters.

- Implement caching mechanisms: Implement caching mechanisms to store frequently accessed data and reduce the computational load on the model.
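As a small illustration of the caching tip, the sketch below memoizes predictions with `functools.lru_cache` so that repeated requests with identical features skip the model call. The `_run_model` function is a hypothetical stand-in for an expensive model or endpoint invocation, and the `CALLS` counter exists only to show that the second request is served from the cache.

```python
from functools import lru_cache

CALLS = {"model": 0}

def _run_model(features):
    """Hypothetical stand-in for an expensive model or endpoint call."""
    CALLS["model"] += 1
    return sum(features) / len(features)

@lru_cache(maxsize=1024)
def cached_predict(features):
    """Cache results by feature tuple; arguments must be hashable."""
    return _run_model(features)

first = cached_predict((0.2, 0.4, 0.9))
second = cached_predict((0.2, 0.4, 0.9))  # served from the cache
```

An in-process cache like this only helps when identical inputs recur and the model is deterministic. It is also lost on restart and not shared across instances, so a shared cache may fit better for multi-instance deployments.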

In conclusion, mastering the deployment of ML models on AWS is crucial for maximizing the benefits of cloud-based machine learning. By following the outlined steps and leveraging AWS services effectively, you can optimize the performance of your deployed models and drive impactful results.